Upcoming Events

Tue, Apr 2nd: 🧠 GenAI Collective 🧠 Research Roundtable 🧑‍🔬

Wed, Apr 10th: 🧠 GenAI Collective NYC 🧠 AI & Fintech Innovators Soiree

Wed, Apr 17th: 🧠 GenAI Collective x Girls in Tech 🙍‍♀️ Diversity and Equity in Tech

Fri, Apr 19th: The AI Rabbit Hole

Tue, Apr 23rd: 🧠 GenAI Collective 🧠 Marin Climate Hike

🗓️ Hungry for even more AI events? Check out SF IRL, MLOps SF, or Cerebral Valley’s spreadsheet!

If you haven’t had a chance to listen to the most recent episode of the Collective Intelligence Community Podcast, check out our very own Thomas Joshi’s interview with Shane Orlick, President of Jasper, the AI platform for marketers. They dive into topics ranging from how AI is reigniting creativity and execution in technology to why AI ethics and governance are key for widespread adoption.

Are AI Agents Coming for Your Job?

In January 2024, OpenAI made headlines by launching the GPT Store, a marketplace for users to build and access thousands of custom GPTs (versions of ChatGPT with custom instructions and access to a particular set of data and tools). This development sparked a vision of the future where users could easily choose from a wide-ranging catalog of highly customized LLM copilots. However, the viral release of Devin, the autonomous AI software engineer developed by Cognition, gave the ecosystem a glimpse into the next phase of Generative AI: autonomous agents.

Devin was one of the first examples of how quickly we’re moving past the copilot phase. The agent was able to complete complex tasks from real job postings on Upwork, meet expectations on coding interviews at top tech companies, and even address open GitHub issues, all without any human assistance. Cognition’s early design partners are sharing their experiences using Devin, too. Check out Chappy and Cofactory’s review on LinkedIn!

Although Devin’s technical abilities have not yet been published or evaluated in depth, the agent’s ability to learn unfamiliar technologies and solve a wide range of software tasks, while learning from and iterating on past mistakes, highlights just how fast the industry is moving. MarketDigits estimated the autonomous agents market at $4.5B+ in 2023 and expects it to reach $64B+ by 2030, growing at a 45% CAGR.
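For readers who want to sanity-check the market figures, here is a quick back-of-the-envelope sketch. It assumes simple annual compounding from the quoted numbers ($4.5B growing at a 45% CAGR toward $64B); the function names are our own and nothing here comes from the MarketDigits report itself.

```python
import math

def years_to_target(start: float, target: float, cagr: float) -> float:
    """Years needed for `start` to reach `target` at a constant annual growth rate."""
    return math.log(target / start) / math.log(1 + cagr)

def project(start: float, cagr: float, years: int) -> float:
    """Value of `start` after compounding at `cagr` for a whole number of years."""
    return start * (1 + cagr) ** years

# Figures quoted in the newsletter (in billions of dollars).
start_b, target_b, cagr = 4.5, 64.0, 0.45

horizon = years_to_target(start_b, target_b, cagr)
print(f"Implied horizon: {horizon:.1f} years")
print(f"After 7 years: ${project(start_b, cagr, 7):.1f}B")
```

Under these assumptions the quoted growth rate implies a horizon of roughly seven years, so the $64B figure lines up with the end of the decade rather than the base year.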

Maturing from conversational AI to LLM agents

The first generation of conversational AI was dominated by end-to-end solutions and frameworks with limited scope and limited NLP model performance. The NLP models were traditionally built in-house and were a key differentiator among leading providers. Companies like Aisera or Freshworks built end-to-end solutions fine-tuned for particular enterprise functions such as IT or HR tickets, while chatbot frameworks like Kore AI or IBM Watson provided greater flexibility at the cost of longer implementation time for specific enterprise use cases.

In early 2023, new LLM-native frameworks started to gain viral adoption. Developers wanted tools that could leverage LLMs as the “brain” and vector stores as the “memory” to break down a wide range of applications into their constituent subtasks without human assistance. Open-source projects like BabyAGI and AutoGPT, as well as emerging startups like LangChain, LlamaIndex, Haystack, and Fixie, developed SDKs for engineers to build and deploy autonomous agents while abstracting out the core components (memory, planning, tool integration). These frameworks allowed developers to build apps on top of foundation models for specific, complex workflows that weren’t bound to a particular model or application. LangChain and others have recently rolled out additional enterprise features for observability (LangSmith) and deployment (LangServe), two areas that have been key barriers to rolling out production agents at scale.
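To make the “vector store as memory” idea a bit more concrete, here is a toy sketch. It is not how LangChain, LlamaIndex, or any other framework mentioned above implements memory: the `ToyVectorMemory` class is hypothetical, and the bag-of-words `embed` function is a stand-in for the learned embedding model a real system would use.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy 'embedding': word counts. Real systems use a learned embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class ToyVectorMemory:
    """Hypothetical in-memory store: keeps (text, vector) pairs and ranks by similarity."""

    def __init__(self) -> None:
        self._items: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self._items.append((text, embed(text)))

    def search(self, query: str, k: int = 1) -> list[str]:
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]

memory = ToyVectorMemory()
memory.add("User prefers invoices exported as CSV files")
memory.add("Password reset tickets are routed to the IT help desk")
memory.add("Quarterly revenue report is due on the first Monday")

# Retrieve the stored note most similar to the question.
print(memory.search("where do password reset tickets go?"))
```

The design point is that the agent does not need to keep everything in the prompt: it stores past observations as vectors and pulls back only the most relevant ones when a new subtask comes up.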

Defining autonomous agents

An LLM agent is an autonomous, goal-seeking system that uses an LLM as its central reasoning engine and typically has 4 key components:

Agent Core is the central coordination and decision-making module that defines the behavioral characteristics of the agent. This is where LLMs such as GPT-4 sit to define agent goals, coordinate subtasks, and maintain instructions on how to use enterprise tools and the other modules.

Memory Module is the component that stores the agent’s internal logs and user interactions across the short- and long-term—typically leveraging an external vector store for fast and scalable retrieval. Memory is necessary for retrieving specific information from subtasks and iterating on the feedback from previous tasks or actions.

Planning Module is aware of all resources in use and determines how to allocate those resources effectively. The current baseline for LLM planning is called Chain-of-Thought. Instead of just being asked what to do, the model is prompted to think step-by-step, which helps it decompose complex tasks into smaller, simpler, more atomic steps. This can be further improved with self-reflection, where the model has access to its past actions and can correct its previous mistakes.

Agent Tools are external tools or applications that agents can use to execute subtasks (e.g. code interpreter, knowledge base, RAG pipeline). These tools can be thought of as the equivalent of APIs for traditional software developers and are used to extend the capabilities of the autonomous system.
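Here is a minimal sketch of how the four components above might fit together. Everything in it is an illustrative assumption: `fake_llm_plan` stands in for a real chain-of-thought LLM call, the two tools are hypothetical, and memory is a plain list rather than a vector store.

```python
from dataclasses import dataclass, field
from typing import Callable

# --- Agent tools: plain functions the agent may call (hypothetical examples) ---
def calculator(expression: str) -> str:
    """Evaluate simple 'a op b' arithmetic without using eval()."""
    left, op, right = expression.split()
    a, b = float(left), float(right)
    results = {"+": a + b, "-": a - b, "*": a * b}
    return str(results[op])

def knowledge_base(query: str) -> str:
    """Look up a fact in a tiny hard-coded knowledge base."""
    facts = {"refund window": "30 days"}
    return facts.get(query, "unknown")

TOOLS: dict[str, Callable[[str], str]] = {
    "calculator": calculator,
    "knowledge_base": knowledge_base,
}

# --- Planning module: stand-in for prompting an LLM to think step by step ---
def fake_llm_plan(goal: str) -> list[tuple[str, str]]:
    """Return a fixed list of (tool, input) steps; a real planner would call an LLM."""
    return [("knowledge_base", "refund window"), ("calculator", "30 * 2")]

# --- Agent core: coordinates planner, tools, and memory ---
@dataclass
class Agent:
    planner: Callable[[str], list[tuple[str, str]]]
    tools: dict[str, Callable[[str], str]]
    memory: list[str] = field(default_factory=list)  # memory module (simplified)

    def run(self, goal: str) -> list[str]:
        outputs = []
        for tool_name, tool_input in self.planner(goal):
            try:
                output = self.tools[tool_name](tool_input)
            except Exception as exc:
                # Failures are logged too, so a later reflection step could see them.
                output = f"error: {exc}"
            self.memory.append(f"{tool_name}({tool_input!r}) -> {output}")
            outputs.append(output)
        return outputs

agent = Agent(planner=fake_llm_plan, tools=TOOLS)
print(agent.run("How long is twice the refund window?"))
print(agent.memory)
```

In a real agent, the planner would be re-invoked with the contents of `memory` after each step, which is where self-reflection comes in: the model sees its past actions (including errors) and can revise the plan.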

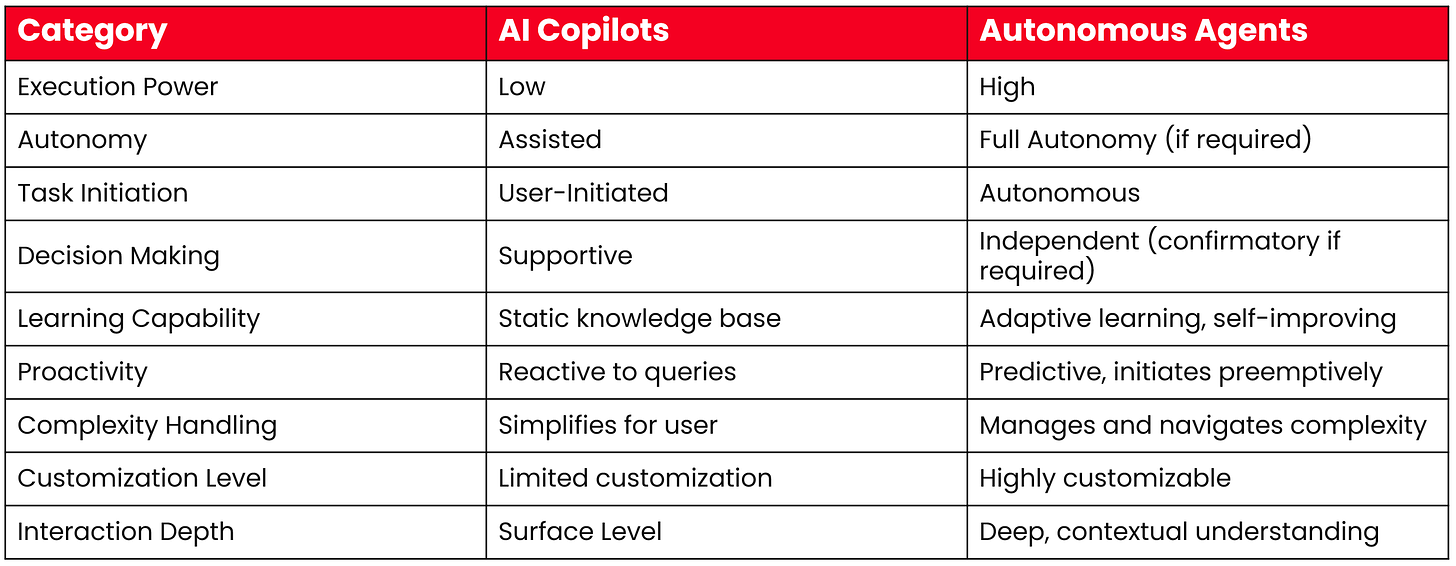

Amit Lal, a principal architect at Microsoft, created an informative visual that breaks down the key differences between autonomous agents and copilots:

The future of autonomous agents

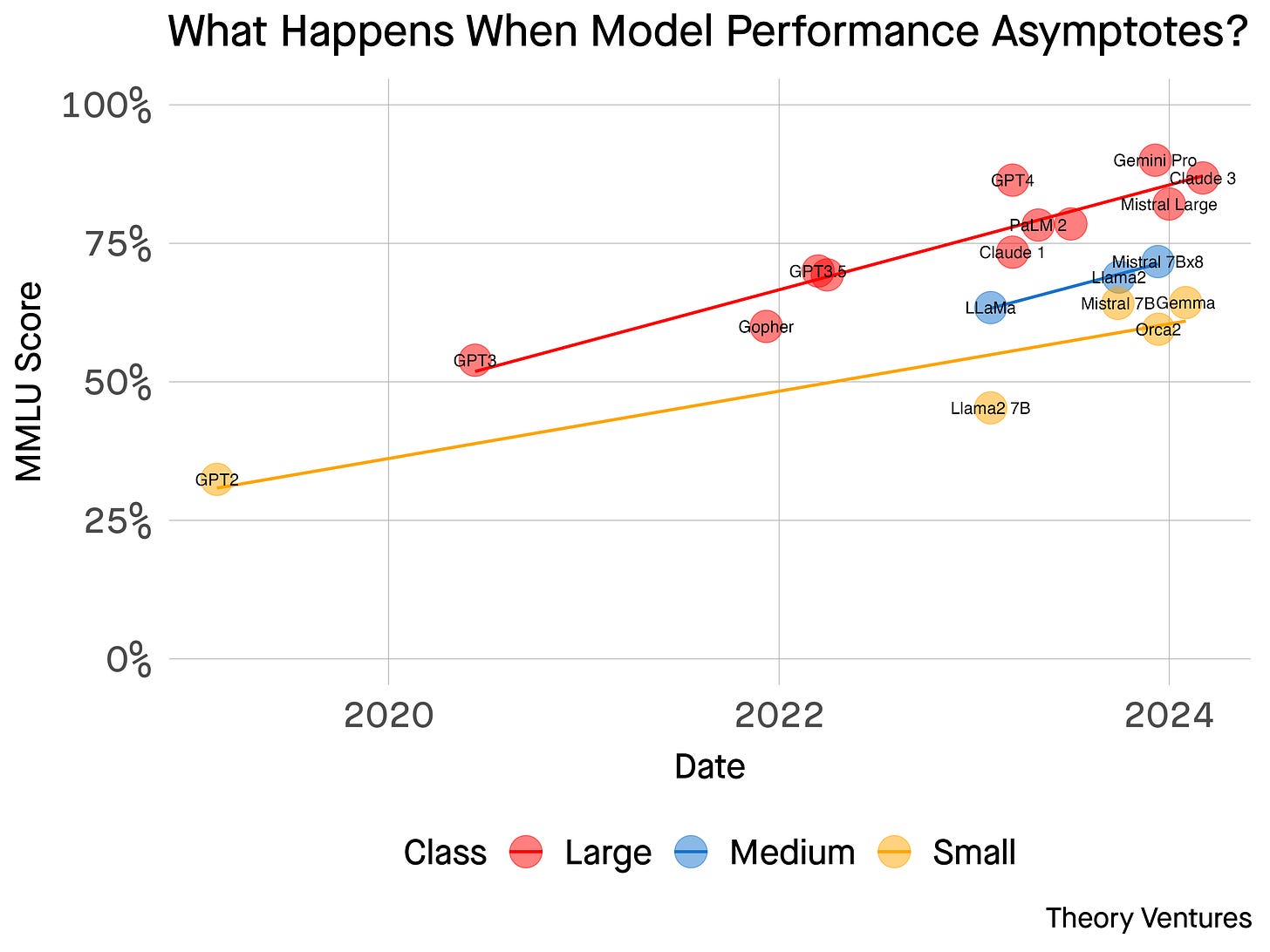

So, what does all of this mean for the future of software, jobs, and Generative AI? The industry has already studied the productivity gains of copilots integrated with the current batch of best-in-class foundation models: GitHub has released studies showing 50%+ productivity gains among developers, and ServiceNow has communicated 60-80% increases. As foundation models continue to approach (or even surpass) human benchmarks and agent frameworks unlock faster time-to-value for autonomous agent development, the future of work will fundamentally change. Today, the highest-performing models score close to 90% on MMLU, a multiple-choice benchmark spanning subjects from high school to professional level.

In the next 2-3 years, we may begin to see autonomous agents displacing a significant number of knowledge workers across departments that historically required human intelligence and decision-making, including data science, RevOps, strategy, design, and even some aspects of management. Knowledge workers will have to find ways to work alongside autonomous agents and continue to develop skill sets in areas that are the most difficult for autonomous systems to automate. Sharing some additional predictions below:

Today: CS & CX functions at large enterprises will be drastically reduced. Customers expect the highest levels of service and see these functions as a key differentiator driving buying decisions. However, autonomous agents are proving their ability to handle complex human interactions without sacrificing service performance. In February 2024, Klarna publicly announced that its autonomous agent, developed in partnership with OpenAI, already handles two-thirds of all customer service chats.

2-5 years: Selling software products will transform into selling AI agents. As verticalized autonomous agents are deployed across enterprise functions, SaaS companies will sell bundled autonomous AI agents that can complete the complex tasks knowledge workers perform today. ServiceNow has seen customers decrease internal teams by 50% due to the generative features they’ve rolled out. There are parallels here to how robotics has changed the operating model of the warehouse and factory.

10+ years: There will be a Fortune 500 company with fewer than 100 employees. A couple of months ago, Sam Altman predicted that AI will allow a solo entrepreneur to build a billion-dollar company. Although this may be years away from reality, the proliferation of verticalized autonomous agents will allow much smaller teams to shift resources from business-as-usual operations to the highest-value tasks.

With that, I hope you enjoyed the newsletter and would love to hear how the community sees autonomous agents reimagining the future of work. If you would like to share your thoughts, hit up Eric on the community Slack, or reach out via email at eric@gaicollective.com for a feature in our next newsletter! I am also excited to announce that we are looking for one of the best and brightest to join the newsletter as a content intern. Reach out if you’re interested in joining!

Events Recap

The Daily AI & Voice Summit was a blast! The incredible demos were the highlight, and we are looking forward to adding more demos to upcoming events. Here's to more warmth, dialogue, and innovation in the AI community! 🤩

Value Board

Come work with us!

Over the last 2 years, we’ve seen our community grow from 3 close friends to 10,000+ members scattered across the globe. This week, we’re excited to announce that we are growing the team and looking to bring on an event coordinator who shares our vision of using in-person events as a catalyst to define the future of AI! Check out the role description below and reach out to hello@gaicollective.com if you’re interested in joining us!

Are you passionate about AI, meticulous about details, and a wizard at organizing events? The GenAI Collective is on the lookout for an Events Coordinator to bring our exciting events to life! This is your chance to dive deep into the AI community, enrich your network, and showcase your skills. If you’re ambitious, organized, and ready to make a splash in the AI scene, we want to hear from you. Let’s create unforgettable events together!

About Eric Fett

Eric joined The GenAI Collective to lead the development of the newsletter and online presence. He is currently an investor at NGP Capital where he focuses on Series A/B investments across enterprise AI, cybersecurity, and industrial technology. He’s passionate about working with early-stage visionaries on their quest to create a better future. When not working, you can find him on a soccer field or at a sushi bar! 🍣

Welcome aboard, Eric! :)